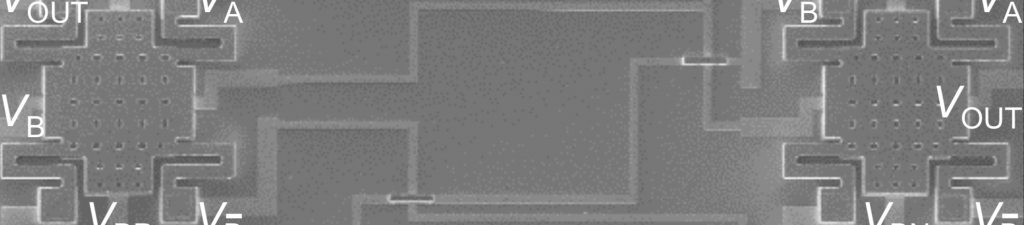

MEMS relay-based integrated logic systems (Liu and Stojanović groups)

Hardware Accelerators for AI

Tsu-Jae King Liu, Sayeef Salahuddin, Sophia Shao, Vladimir Stojanović, Eli Yablonovitch

The emergence of machine learning and other artificial intelligence applications has been accompanied by a growing need for new hardware architectures. The BETR Center research groups of Professors Tsu-Jae King Liu, Sayeef Salahuddin, Vladimir Stojanović, Laura Waller, Ming Wu, and Eli Yablonovitch are investigating hardware accelerators specialized for the large-scale matrix computations used in deep neural networks. The BETR Center is also developing new machines for solving difficult combinatorial optimization problems, such as those found in operations research, finance, and circuit design. Because of its intrinsic architectural and algorithmic limitations, the conventional von Neumann computer is ill-suited to these applications in terms of latency and energy efficiency, which opens the door to alternative physical systems based on emerging technologies. BETR Center researchers are investigating deep neural networks based on nanoelectromechanical relays and other novel switches, architecture-aware network pruning techniques, and analog machines that can solve NP-hard optimization problems without the complexity of quantum bits.